How do crawling and indexing work in Blogger? This is a common question, and an important one. A blog or website owner should know how to manage the crawling and indexing options. To do that, it helps to first understand what web crawlers, spiders, or bots are.

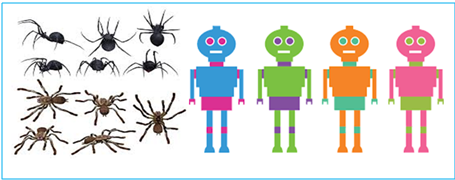

What are web spiders, crawlers or bots?

Spiders or crawlers are software programs that search engines run to discover new and updated pages on the web. The pages they find are stored in the search engine's index. When a user enters a keyword into a search engine, the results for that keyword come from this index of previously crawled pages.
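To make the idea concrete, here is a minimal sketch of crawling and indexing on a tiny in-memory "web". The `PAGES` dictionary, its URLs, and the function names are all made up for illustration; real search engines are far more complex than this.

```python
from collections import deque

# Hypothetical in-memory "web": URL -> (page text, outgoing links).
PAGES = {
    "/home": ("welcome to my blog about blogger tips", ["/post-1", "/post-2"]),
    "/post-1": ("how crawling works in blogger", ["/home"]),
    "/post-2": ("custom robots txt for blogger", ["/post-1"]),
    "/orphan": ("nobody links to this page", []),
}

def crawl(start):
    """Follow links breadth-first from `start`; return URLs in discovery order."""
    seen, queue, order = {start}, deque([start]), []
    while queue:
        url = queue.popleft()
        order.append(url)
        for link in PAGES[url][1]:
            if link not in seen:
                seen.add(link)
                queue.append(link)
    return order

def build_index(urls):
    """Map each word to the set of crawled URLs whose text contains it."""
    index = {}
    for url in urls:
        for word in PAGES[url][0].split():
            index.setdefault(word, set()).add(url)
    return index

def search(index, keyword):
    """Answer a query from the index only; no crawling happens at query time."""
    return sorted(index.get(keyword.lower(), set()))

crawled = crawl("/home")
index = build_index(crawled)
print(search(index, "blogger"))  # pages reached by the crawler that mention "blogger"
print(search(index, "nobody"))   # empty: "/orphan" was never discovered, so never indexed
```

Notice that the page nobody links to is never found by the crawler, so it cannot appear in any search result.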

How Crawling and Indexing Work in Blogger, and Why the robots.txt File Is Important

Now let us look at how to manage crawling and indexing from the Blogger dashboard:

- Sign in to the Blogger dashboard

- In the dashboard, go to Settings

- In Settings, go to Search preferences

- Go to Crawlers and indexing

- Go to Custom robots.txt

- Click Edit

- In the Enable option, click Yes

- In the box below, paste your robots.txt code

- Replace the URL in the code with your own blog's URL

- Click Save changes
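The code to paste is not reproduced in this post, so the following is only a sketch of what a simple robots.txt for a Blogger blog might look like, not the exact code. It assumes a common setup: block the `/search` result and label pages, allow everything else, and point to a sitemap at `/sitemap.xml`. The blog URL `https://example.blogspot.com` and the helper names are hypothetical. The script builds the text and then checks it with Python's standard `urllib.robotparser`, so you can see which URLs a crawler would be allowed to fetch before you paste anything into the dashboard.

```python
from urllib.robotparser import RobotFileParser

def build_robots_txt(blog_url):
    """Return robots.txt text for `blog_url` (rules are an assumed, common setup)."""
    base = blog_url.rstrip("/")
    lines = [
        "User-agent: *",       # the rules below apply to every crawler
        "Disallow: /search",   # assumption: keep search/label pages out
        "Allow: /",            # everything else may be crawled
        "",
        f"Sitemap: {base}/sitemap.xml",  # assumption: sitemap lives here
    ]
    return "\n".join(lines) + "\n"

def is_allowed(robots_txt, url, user_agent="*"):
    """Check whether `user_agent` may fetch `url` under the given rules."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)

robots_txt = build_robots_txt("https://example.blogspot.com/")
print(robots_txt)
print(is_allowed(robots_txt, "https://example.blogspot.com/2020/01/my-post.html"))
print(is_allowed(robots_txt, "https://example.blogspot.com/search/label/seo"))
```

With these rules the post URL is allowed and the `/search/label/...` URL is blocked. Replace the example URL with your own blog address before using the generated text.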

The robots.txt file is a plain text file (with the .txt extension) that tells search engines which pages to crawl and which pages not to crawl. To check how many pages of your website or blog are indexed by a search engine, type site:yourdomain.com into the search engine, using your own domain, and press Enter. The pages listed in the results are the ones that the search engine has indexed.
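If you check several blogs often, the site: query can be turned into a ready-made link. This is a small sketch; the function name is made up, and the Google search address is used only as an example default that you can swap for another search engine.

```python
from urllib.parse import urlencode

def site_query_url(domain, search_base="https://www.google.com/search"):
    """Build a search URL for the query site:<domain>."""
    query = f"site:{domain}"
    # urlencode escapes the colon so the query is safe inside a URL.
    return f"{search_base}?{urlencode({'q': query})}"

print(site_query_url("yourdomain.com"))
# prints https://www.google.com/search?q=site%3Ayourdomain.com
```

Open the printed link in a browser to see the indexed pages for that domain.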

If this post was helpful, please share it with your friends. Follow my blog by email, and share your opinion in the comments section.
